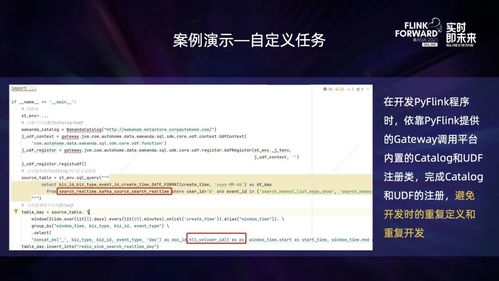

Flink Programming

Title: A Comprehensive Guide to Flink Stream Processing

Apache Flink has emerged as a powerful framework for stream processing, offering high throughput, low latency, and fault tolerance. Whether you are a beginner or an experienced developer, understanding Flink's core concepts and programming model is essential for building robust, scalable stream processing pipelines. Let's take a closer look at programming with Flink.

Introduction to Flink

Apache Flink is an open-source stream processing framework known for processing large-scale data streams with low latency and high throughput. It supports both batch and stream processing, which makes it suitable for a wide range of use cases, including real-time analytics, event-driven applications, and continuous data processing.

Core Concepts

1. Stream Processing

In Flink, data is processed as continuous streams, enabling real-time analysis and computation. Stream processing handles unbounded (potentially infinite) datasets by processing each record as it arrives, rather than first storing the data and processing it later.
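To make the contrast with store-then-process concrete, here is a minimal plain-Python model (not Flink code): an endless generator stands in for an unbounded source, and a running sum emits an updated result for every record without ever holding the full dataset. The source and function names are hypothetical.

```python
import itertools
from typing import Iterable, Iterator


def sensor_readings() -> Iterator[int]:
    """Hypothetical unbounded source: yields readings forever."""
    for i in itertools.count():
        yield i % 5  # deterministic stand-in for real measurements


def running_sum(stream: Iterable[int]) -> Iterator[int]:
    """Emit the sum so far after each record; only the total is kept in memory."""
    total = 0
    for value in stream:
        total += value
        yield total


# The source never ends, so look at just the first seven results.
first_results = list(itertools.islice(running_sum(sensor_readings()), 7))
print(first_results)  # [0, 1, 3, 6, 10, 10, 11]
```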

2. Dataflow Graph

Flink programs are represented as dataflow graphs, typically directed acyclic graphs (DAGs), in which nodes represent operators (such as map, filter, or aggregate) and edges represent the data streams flowing between them. This abstraction lets Flink optimize execution, for example by chaining adjacent operators into a single task.
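As a rough illustration of the graph view, the sketch below models a hypothetical pipeline as an adjacency list and uses Kahn's algorithm to produce an order in which every operator comes after its upstream operators. It is a plain-Python model of the idea, not how Flink's optimizer is implemented; the operator names are made up.

```python
from collections import deque
from typing import Dict, List


def topological_order(edges: Dict[str, List[str]]) -> List[str]:
    """Order operators so each one follows all of its upstream operators
    (Kahn's algorithm). Raises ValueError if the graph has a cycle."""
    nodes = set(edges)
    for targets in edges.values():
        nodes.update(targets)
    indegree = {n: 0 for n in nodes}
    for targets in edges.values():
        for t in targets:
            indegree[t] += 1
    ready = deque(sorted(n for n in nodes if indegree[n] == 0))  # sources
    order = []
    while ready:
        node = ready.popleft()
        order.append(node)
        for t in sorted(edges.get(node, [])):
            indegree[t] -= 1
            if indegree[t] == 0:  # all upstream operators already placed
                ready.append(t)
    if len(order) != len(nodes):
        raise ValueError("graph has a cycle, so it is not a DAG")
    return order


# Hypothetical pipeline: parsed records are both filtered and aggregated.
pipeline = {
    "source": ["parse"],
    "parse": ["filter_errors", "count_per_key"],
    "filter_errors": ["alert_sink"],
    "count_per_key": ["metrics_sink"],
}
print(topological_order(pipeline))
# ['source', 'parse', 'count_per_key', 'filter_errors', 'metrics_sink', 'alert_sink']
```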

3. Operators

Operators are the building blocks of Flink programs. They perform various transformations on data streams, such as `map`, `filter`, `reduce`, `join`, and `window`.
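The following plain-Python sketch (a model, not the Flink API) chains a flatMap, a filter, a map, and a per-key rolling reduce over a tiny word stream. Like a reduce on a keyed stream in Flink, the rolling reduce emits the updated aggregate for every input record. The helper `rolling_reduce` and the sample data are hypothetical.

```python
from typing import Callable, Dict, Hashable, Iterable, Iterator, Tuple, TypeVar

V = TypeVar("V")


def rolling_reduce(
    stream: Iterable[Tuple[Hashable, V]], fn: Callable[[V, V], V]
) -> Iterator[Tuple[Hashable, V]]:
    """Per-key rolling reduce: emit the updated aggregate for every input record."""
    state: Dict[Hashable, V] = {}  # one accumulator per key
    for key, value in stream:
        state[key] = fn(state[key], value) if key in state else value
        yield key, state[key]


lines = ["the cat", "cat dog", "the dog cat"]
words = (w for line in lines for w in line.split())  # flatMap: line -> words
kept = (w for w in words if w != "the")                # filter: drop a stop word
pairs = ((w, 1) for w in kept)                         # map: word -> (word, 1)
counts = list(rolling_reduce(pairs, lambda a, b: a + b))
print(counts)
# [('cat', 1), ('cat', 2), ('dog', 1), ('dog', 2), ('cat', 3)]
```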

4. State Management

Flink provides built-in support for stateful computations. Operators can keep state across events, scoped either to a key (keyed state) or to an operator instance, and window operators keep state for each window until it fires. This enables tasks such as sessionization and aggregation, and Flink's checkpoints make this state fault tolerant.
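Sessionization is a good example of keyed state. In this plain-Python model (not Flink's session windows), the state kept per user is the timestamp of that user's last event, and a new session starts when the gap since that event exceeds a threshold. The function name, the gap value, and the assumption that events arrive in timestamp order are simplifications for illustration.

```python
from collections import defaultdict


def sessionize(events, gap):
    """Group (user, timestamp) events into per-user sessions.

    Simplified model: a user's session ends when the gap between two
    consecutive events exceeds `gap`. Events are assumed to arrive in
    timestamp order.
    """
    last_seen = {}                # keyed state: last timestamp per user
    sessions = defaultdict(list)  # user -> list of sessions (lists of timestamps)
    for user, ts in events:
        if user not in last_seen or ts - last_seen[user] > gap:
            sessions[user].append([])  # inactivity gap exceeded: new session
        sessions[user][-1].append(ts)
        last_seen[user] = ts
    return dict(sessions)


events = [("alice", 1), ("bob", 2), ("alice", 3), ("alice", 20), ("bob", 4)]
print(sessionize(events, gap=10))
# {'alice': [[1, 3], [20]], 'bob': [[2, 4]]}
```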

Flink APIs

1. DataStream API

The DataStream API is designed for building continuous data processing applications. It offers a fluent and expressive API for defining transformations on data streams using operators like `map`, `filter`, `flatMap`, `window`, and `aggregate`.
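Windowing is the DataStream operation that most often needs explaining. The sketch below models tumbling (fixed-size, non-overlapping) event-time windows in plain Python: each event is assigned to the window `[start, start + size)` with `start = timestamp - timestamp % size`, and values are summed per key and window. It assumes non-negative timestamps and no window offset, and it computes all windows at once, whereas Flink emits each event-time window when the watermark passes its end. The function name and data are hypothetical.

```python
from collections import defaultdict


def tumbling_window_sum(events, size):
    """Sum values per (key, window) for fixed, non-overlapping windows.

    Each event (key, timestamp, value) falls into exactly one window
    [start, start + size), where start = timestamp - timestamp % size.
    """
    totals = defaultdict(int)
    for key, ts, value in events:
        start = ts - ts % size
        totals[(key, start, start + size)] += value
    return dict(totals)


clicks = [("home", 1, 1), ("home", 4, 1), ("cart", 6, 1), ("home", 12, 1)]
print(sorted(tumbling_window_sum(clicks, 10).items()))
# [(('cart', 0, 10), 1), (('home', 0, 10), 2), (('home', 10, 20), 1)]
```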

2. DataSet API

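The DataSet API was Flink's original API for batch processing. It provides functionality similar to the DataStream API but operates on bounded datasets rather than unbounded streams. In recent Flink releases the DataSet API is deprecated (and it was removed in Flink 2.0); batch jobs are instead written with the DataStream API in batch execution mode or with the Table API & SQL.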
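The difference between bounded and unbounded processing shows up in what gets emitted. In this plain-Python model, the streaming version emits an updated total after every record because it cannot know whether more data will arrive, while the batch version, knowing its input is finite, emits only the final total per key. This mirrors how Flink's batch execution mode emits only final results for rolling aggregations, but the code itself is only an illustration with hypothetical names.

```python
def streaming_sum(records):
    """Unbounded-style: emit an updated total after every record."""
    totals = {}
    for key, value in records:
        totals[key] = totals.get(key, 0) + value
        yield key, totals[key]


def batch_sum(records):
    """Bounded-style: the input is known to end, so emit only final totals."""
    totals = {}
    for key, value in records:
        totals[key] = totals.get(key, 0) + value
    return sorted(totals.items())


orders = [("eu", 5), ("us", 3), ("eu", 2)]
print(list(streaming_sum(orders)))  # [('eu', 5), ('us', 3), ('eu', 7)]
print(batch_sum(orders))            # [('eu', 7), ('us', 3)]
```

The last update per key in the streaming output equals the batch result, which is why bounded data can be treated as a special case of a stream.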

3. Table API & SQL

Flink also supports relational processing through the Table API and SQL. The Table API is a language-integrated relational API, and SQL queries can run over both bounded and unbounded tables, which gives users who already know SQL a familiar interface.
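To show the shape of a relational query, the sketch below runs a grouped count with Python's built-in `sqlite3` module. SQLite is only a stand-in here; it is not Flink, and the table and column names are hypothetical. The `SELECT ... GROUP BY` statement is standard SQL that Flink SQL also accepts, with one important difference: over an unbounded table, Flink keeps the query running and updates each user's count as new rows arrive, whereas SQLite computes a single static answer.

```python
import sqlite3

# sqlite3 stands in for a SQL engine; this is NOT Flink.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE clicks (user_name TEXT, url TEXT)")
conn.executemany(
    "INSERT INTO clicks VALUES (?, ?)",
    [("alice", "/home"), ("bob", "/cart"), ("alice", "/cart")],
)

# A grouped aggregation: one output row per user with a click count.
query = "SELECT user_name, COUNT(url) AS cnt FROM clicks GROUP BY user_name"
rows = sorted(conn.execute(query).fetchall())  # sorted for deterministic output
print(rows)  # [('alice', 2), ('bob', 1)]
conn.close()
```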

Key Components

1. JobManager

The JobManager coordinates the distributed execution of a Flink application: it accepts submitted jobs, turns their dataflow graphs into execution plans, schedules tasks across the cluster, and coordinates checkpoints and recovery from failures.

2. TaskManager

TaskManagers (also called workers) execute the tasks assigned by the JobManager. Each TaskManager offers a configured number of task slots, runs the operators placed in those slots, and exchanges data with other tasks over the network.
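Task slots explain how the JobManager and TaskManagers fit together. By default, Flink lets subtasks of different operators from the same job share a slot, so a job needs as many slots as its highest operator parallelism. The plain-Python helpers below apply that rule to a hypothetical job; they assume every operator is in the default slot-sharing group, and the function names are made up.

```python
import math
from typing import Dict


def required_slots(operator_parallelism: Dict[str, int]) -> int:
    """With slot sharing, a job needs as many slots as its highest parallelism."""
    return max(operator_parallelism.values())


def required_taskmanagers(operator_parallelism: Dict[str, int], slots_per_tm: int) -> int:
    """Number of TaskManagers needed if each offers `slots_per_tm` slots."""
    return math.ceil(required_slots(operator_parallelism) / slots_per_tm)


# Hypothetical job: operator name -> parallelism.
job = {"source": 2, "map": 6, "window": 6, "sink": 1}
print(required_slots(job))            # 6
print(required_taskmanagers(job, 4))  # 2
```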

3. State Backend

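State backends determine how and where an application's working state is stored. Flink ships with a heap-based backend (`HashMapStateBackend`), which keeps state as objects on the JVM heap, and an embedded RocksDB backend (`EmbeddedRocksDBStateBackend`), which stores state in RocksDB, an embedded key-value store, on the TaskManager's local disk. Independently of the backend, checkpoints are written to durable storage such as a distributed file system (for example, Hadoop's HDFS or Amazon S3).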
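The point of a pluggable state backend is that operator logic does not change when the storage does. The model below (plain Python, not Flink's backend interface) runs the same per-key counter against an in-memory dict and against an embedded on-disk store, with `sqlite3` standing in for RocksDB. The class names are hypothetical, and the sketch ignores serialization and checkpointing, which real backends handle.

```python
import os
import sqlite3
import tempfile


class HeapBackend:
    """Hypothetical backend: state lives as Python objects in memory."""

    def __init__(self):
        self._data = {}

    def get(self, key, default=0):
        return self._data.get(key, default)

    def put(self, key, value):
        self._data[key] = value


class DiskBackend:
    """Hypothetical backend: state lives in an embedded store (sqlite3 as a RocksDB stand-in)."""

    def __init__(self, path):
        self._conn = sqlite3.connect(path)
        self._conn.execute("CREATE TABLE IF NOT EXISTS state (k TEXT PRIMARY KEY, v INTEGER)")

    def get(self, key, default=0):
        row = self._conn.execute("SELECT v FROM state WHERE k = ?", (key,)).fetchone()
        return default if row is None else row[0]

    def put(self, key, value):
        self._conn.execute("INSERT OR REPLACE INTO state (k, v) VALUES (?, ?)", (key, value))

    def close(self):
        self._conn.close()


def count_per_key(records, backend):
    """Operator logic: identical no matter which backend stores the state."""
    for key in records:
        backend.put(key, backend.get(key) + 1)
    return {key: backend.get(key) for key in sorted(set(records))}


records = ["a", "b", "a"]
print(count_per_key(records, HeapBackend()))  # {'a': 2, 'b': 1}
with tempfile.TemporaryDirectory() as tmp:
    disk = DiskBackend(os.path.join(tmp, "state.db"))
    print(count_per_key(records, disk))       # {'a': 2, 'b': 1}
    disk.close()
```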

Deployment Options

1. Standalone Deployment

In standalone mode, Flink can be deployed on a single machine or a cluster of machines without relying on external resource managers.

2. YARN/Kubernetes

Flink can also be deployed on resource managers such as YARN or Kubernetes, which allocate and manage resources dynamically in a distributed environment. (Apache Mesos was supported in older releases, but Mesos support was removed in Flink 1.14.)

Best Practices

1. Optimize State Usage

Keep state small: store only what the computation needs, expire state that is no longer relevant, and choose a state backend that matches your application's state size and latency requirements.
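State time-to-live (TTL) is one common way to keep state small. The plain-Python class below models the idea: each entry remembers when it was last written, and a read after the TTL has elapsed treats the entry as gone and deletes it. Flink configures this with `StateTtlConfig` on a state descriptor; this class, its methods, and the explicit `now` argument are hypothetical simplifications.

```python
class TtlState:
    """Hypothetical keyed value state whose entries expire `ttl` time units
    after the last write. The clock is passed in explicitly for determinism."""

    def __init__(self, ttl):
        self.ttl = ttl
        self._entries = {}  # key -> (value, last_write_time)

    def update(self, key, value, now):
        self._entries[key] = (value, now)  # a write refreshes the TTL

    def value(self, key, now):
        entry = self._entries.get(key)
        if entry is None:
            return None
        value, written_at = entry
        if now - written_at >= self.ttl:
            del self._entries[key]  # expired: remove it so state stops growing
            return None
        return value  # reads do not refresh the TTL in this model

    def size(self):
        return len(self._entries)


state = TtlState(ttl=60)
state.update("session-42", "cart=3", now=0)
print(state.value("session-42", now=30))  # cart=3
print(state.value("session-42", now=90))  # None (expired and removed)
print(state.size())                       # 0
```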

2. Ensure Fault Tolerance

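Use Flink's built-in fault tolerance mechanisms: periodic checkpoints for automatic recovery and savepoints for planned operations such as upgrades. After a failure, Flink restores a consistent snapshot of the application's state and source positions, which gives exactly-once consistency for that state.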
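Checkpointing works because a snapshot captures the source position and the operator state together. The plain-Python model below (not Flink's checkpoint mechanism, which injects barriers into the streams) sums a list of records, snapshots `(offset, total)` every few records, simulates a crash, and then restores the last snapshot and replays from its offset. The recovered total matches the failure-free run, so each record affects the state exactly once. The function and parameter names are hypothetical.

```python
def run(records, checkpoint_every, fail_at=None, restore_from=None):
    """Running sum with periodic snapshots of (source offset, operator state).

    Returns (result, last_snapshot); result is None if a crash is simulated.
    """
    offset, total = (0, 0) if restore_from is None else restore_from
    last_snapshot = (offset, total)
    while offset < len(records):
        if fail_at is not None and offset == fail_at:
            return None, last_snapshot  # simulated crash: in-flight state is lost
        total += records[offset]
        offset += 1
        if offset % checkpoint_every == 0:
            last_snapshot = (offset, total)  # consistent (position, state) pair
    return total, last_snapshot


data = [3, 1, 4, 1, 5, 9, 2, 6]
expected, _ = run(data, checkpoint_every=3)                 # failure-free run
_, snapshot = run(data, checkpoint_every=3, fail_at=5)      # crashes at offset 5
recovered, _ = run(data, checkpoint_every=3, restore_from=snapshot)
print(expected, snapshot, recovered)  # 31 (3, 8) 31
```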

3. Scale Horizontally

Design your Flink applications to scale horizontally: partition data by key and raise operator parallelism so that tasks are distributed across multiple TaskManagers, which increases throughput.
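Horizontal scaling relies on routing all records with the same key to the same parallel subtask, so per-key state stays local. The sketch below uses a stable hash (`zlib.crc32`) modulo the parallelism. This is a simplification: Flink actually hashes keys into key groups and assigns ranges of key groups to subtasks, which is what allows state to be redistributed when parallelism changes. Function names and data are hypothetical.

```python
import zlib
from collections import defaultdict


def subtask_for(key: str, parallelism: int) -> int:
    """Route a key to a subtask with a stable hash (simplified; no key groups)."""
    return zlib.crc32(key.encode("utf-8")) % parallelism


def partition(records, parallelism):
    """Split (key, value) records into per-subtask buckets."""
    buckets = defaultdict(list)
    for key, value in records:
        buckets[subtask_for(key, parallelism)].append((key, value))
    return dict(buckets)


records = [("alice", 1), ("bob", 1), ("alice", 2), ("carol", 5)]
for subtask, items in sorted(partition(records, parallelism=3).items()):
    print(subtask, items)  # every record for a given key lands in one bucket
```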

Conclusion

Apache Flink offers a powerful platform for building real-time stream processing applications with high throughput, low latency, and fault tolerance. By understanding its core concepts, APIs, and deployment options, developers can use Flink to implement robust and scalable data processing pipelines for a wide variety of use cases.

This guide provides a solid foundation for getting started with Flink programming, but there is much more to explore as you dive deeper into stream processing.